Regression analysis is a cornerstone of predictive modeling: it quantifies how predictors relate to an outcome and supports informed decisions. Ordinary least squares is often the starting point, but it struggles with many correlated predictors, non-linear relationships, outliers, and skewed targets. This article surveys techniques that address those limitations and notes where each one is most useful.

1. Regularization Techniques

1.1 Lasso Regression (L1 Regularization)

Lasso (Least Absolute Shrinkage and Selection Operator) regression applies L1 regularization: it adds a penalty proportional to the sum of the absolute values of the coefficients to the least-squares loss. The penalty promotes sparsity by shrinking some coefficients exactly to zero, which effectively performs feature selection. Lasso is particularly useful for high-dimensional datasets where only a subset of the features is expected to matter.
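To see why the L1 penalty produces exact zeros, it helps to look at the simplest possible case. The sketch below is an illustration rather than a production solver: it assumes a single centered feature, no intercept, and the objective written in the docstring. The names `soft_threshold` and `lasso_slope` are made up for this example.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; anything within [-lam, lam] becomes exactly 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_slope(x, y, lam):
    """Lasso slope for one centered feature and no intercept (illustrative only).

    Minimizes (1 / (2n)) * sum((y_i - b * x_i) ** 2) + lam * abs(b).
    """
    n = len(x)
    rho = sum(xi * yi for xi, yi in zip(x, y)) / n  # average of x_i * y_i
    z = sum(xi * xi for xi in x) / n                # average of x_i ** 2
    return soft_threshold(rho, lam) / z


x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [2.0 * xi for xi in x]  # toy data with a true slope of 2

for lam in (0.0, 1.0, 5.0):
    print(lam, lasso_slope(x, y, lam))
```

With no penalty the slope is recovered unchanged; as `lam` grows the slope shrinks, and once `lam` exceeds the feature-target association it is set to exactly zero. That is the mechanism behind Lasso's feature selection.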

1.2 Ridge Regression (L2 Regularization)

Ridge regression applies L2 regularization, adding a penalty proportional to the sum of the squared coefficients. Unlike Lasso, Ridge does not eliminate features; it shrinks all coefficients toward zero without setting them exactly to zero, which helps manage multicollinearity and improves model stability. It is effective when most features are expected to contribute to the prediction.
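The shrink-but-never-eliminate behavior can be checked in a one-feature toy case, where the Ridge solution has a simple closed form. This is a minimal sketch under the assumptions of a single centered feature and no intercept; `ridge_slope` is a name invented for this example.

```python
def ridge_slope(x, y, lam):
    """Ridge slope for one centered feature and no intercept (illustrative only).

    Minimizes sum((y_i - b * x_i) ** 2) + lam * b ** 2,
    whose solution is sum(x * y) / (sum(x * x) + lam).
    """
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    return sxy / (sxx + lam)


x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [2.0 * xi for xi in x]  # toy data with a true slope of 2

for lam in (0.0, 10.0, 1000.0):
    print(lam, ridge_slope(x, y, lam))
```

The penalty enters the denominator, so a larger `lam` pulls the slope toward zero, but for any finite `lam` the slope stays non-zero.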

1.3 Elastic Net Regression

Elastic Net combines the L1 and L2 penalties of Lasso and Ridge, with a mixing parameter that balances feature selection against coefficient shrinkage. It is particularly useful when features are correlated: Lasso tends to keep one feature from a correlated group and drop the rest, whereas Elastic Net tends to keep or drop the group together. Setting the mixing parameter to either extreme recovers pure Lasso or pure Ridge.
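One common way to write the Elastic Net objective is shown below. The symbols are assumptions of this sketch and not notation from the article: \lambda is the overall penalty strength, \alpha in [0, 1] is the mixing weight, n is the number of observations, and different libraries scale the terms slightly differently.

```latex
\hat{\beta} = \arg\min_{\beta} \;
  \frac{1}{2n} \lVert y - X\beta \rVert_2^2
  + \lambda \left( \alpha \lVert \beta \rVert_1
  + \frac{1 - \alpha}{2} \lVert \beta \rVert_2^2 \right)
```

With \alpha = 1 the L2 term vanishes and the objective is Lasso; with \alpha = 0 only the L2 term remains and the objective is Ridge.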

2. Polynomial Regression

Polynomial regression extends linear regression by adding powers of the predictors (and, optionally, their products) as extra features, allowing it to capture non-linear relationships between predictors and the target variable. The model remains linear in its coefficients, so it can still be fit with ordinary least squares. Caution is needed to avoid overfitting, especially with higher-degree polynomials, which can oscillate between data points and extrapolate poorly.
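The sketch below illustrates the idea for a single predictor: expand x into powers, then fit ordinary least squares on the expanded features. It is a pure-Python illustration that solves the normal equations directly, which is fine for a toy example but numerically fragile for high degrees. The helper names (`poly_features`, `solve`, `fit_polynomial`) are invented here.

```python
def poly_features(x, degree):
    """Expand a scalar x into [1, x, x**2, ..., x**degree]."""
    return [x ** d for d in range(degree + 1)]


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(M[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (M[i][n] - tail) / M[i][i]
    return w


def fit_polynomial(xs, ys, degree):
    """Least squares on the expanded features via the normal equations."""
    X = [poly_features(x, degree) for x in xs]
    k = degree + 1
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    Xty = [sum(row[i] * y for row, y in zip(X, ys)) for i in range(k)]
    return solve(XtX, Xty)


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [1.0 + 2.0 * x + 3.0 * x * x for x in xs]  # noise-free quadratic toy data
coeffs = fit_polynomial(xs, ys, degree=2)
print(coeffs)  # approximately [1, 2, 3]: intercept, linear, quadratic
```

The fitting step is plain linear regression; only the feature expansion is new.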

3. Quantile Regression

Quantile regression models conditional quantiles of the target variable (e.g., the median or the 90th percentile) rather than the conditional mean. Fitting several quantiles gives a more complete view of the relationship between predictors and the target, especially when the data is skewed, heteroscedastic, or contains outliers. It is beneficial for understanding how the effect of a predictor differs across the distribution, for example at the low and high ends.
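Quantile regression is usually fit by minimizing the pinball (quantile) loss, which penalizes under- and over-prediction asymmetrically. The sketch below uses a predictor-free toy setup, an assumption made purely for illustration, to show that the constant minimizing this loss is the requested quantile rather than the mean. `pinball_loss` and `best_constant` are names invented for this example.

```python
def pinball_loss(y_true, y_pred, q):
    """Average pinball loss for quantile level q in (0, 1)."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        diff = y - p
        # Under-prediction costs q per unit, over-prediction costs (1 - q).
        total += q * diff if diff >= 0 else (q - 1) * diff
    return total / len(y_true)


def best_constant(y, q):
    """Observed value that minimizes pinball loss when used as a constant prediction."""
    return min(y, key=lambda c: pinball_loss(y, [c] * len(y), q))


y = [1.0, 2.0, 3.0, 4.0, 100.0]  # toy data with one extreme value

print(sum(y) / len(y))        # the mean, pulled up by the extreme value
print(best_constant(y, 0.5))  # the median
print(best_constant(y, 0.9))  # an upper quantile
```

In a full quantile regression the constant is replaced by a linear function of the predictors, and the same loss is minimized over its coefficients.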

4. Robust Regression

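Robust regression techniques are designed to handle outliers and violations of model assumptions better than ordinary least squares, whose squared-error loss lets a few extreme points dominate the fit. Huber regression uses a loss that is quadratic for small residuals and linear for large ones, while RANSAC (Random Sample Consensus) repeatedly fits the model on random subsets and keeps the fit supported by the most inliers. Both can produce more reliable estimates when the data includes anomalies or heavy-tailed errors.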
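As a rough illustration of the Huber idea, the sketch below defines the Huber loss and uses iteratively reweighted averaging to estimate a robust center for a toy sample. It assumes an intercept-only model and a fixed threshold `delta` with no scale estimation, both simplifications made for this example; real implementations typically estimate the residual scale as well.

```python
def huber_loss(residual, delta=1.0):
    """Quadratic for small residuals, linear beyond delta."""
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)


def huber_location(values, delta=1.0, iterations=100):
    """Robust center via iteratively reweighted averaging (intercept-only model)."""
    mu = sum(values) / len(values)  # start from the ordinary mean
    for _ in range(iterations):
        # Points far from the current estimate get weight delta / |residual| < 1.
        weights = [
            1.0 if abs(v - mu) <= delta else delta / abs(v - mu)
            for v in values
        ]
        mu = sum(w * v for w, v in zip(weights, values)) / sum(weights)
    return mu


data = [1.0, 2.0, 3.0, 4.0, 100.0]  # toy data with one outlier
plain_mean = sum(data) / len(data)
robust_center = huber_location(data)
print(plain_mean, robust_center)
```

The outlier drags the ordinary mean far from the bulk of the data, while the Huber estimate stays close to it because large residuals are down-weighted.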

5. Stepwise Regression

Stepwise regression adds or removes predictors one at a time based on a criterion such as statistical significance, AIC, or BIC. The process can be forward (starting from no predictors and adding), backward (starting from all predictors and removing), or bidirectional (a combination of both). It can produce simpler, more interpretable models, but it should be used cautiously: the search is greedy, it can overfit, and p-values computed after selection tend to be overly optimistic.
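The forward variant can be sketched as a greedy loop that is independent of the underlying model. In the example below, `score` stands in for whatever criterion is used in practice (for instance a cross-validated metric or a negated information criterion). The feature names and the `toy_score` values are entirely hypothetical and exist only to make the loop runnable.

```python
def forward_selection(candidates, score, min_gain=1e-6):
    """Greedy forward selection; `score(subset)` returns a value to maximize."""
    selected = []
    best = score(selected)
    remaining = list(candidates)
    while remaining:
        # Try adding each remaining candidate and keep the best one.
        trials = [(score(selected + [c]), c) for c in remaining]
        top_score, top = max(trials)
        if top_score - best <= min_gain:
            break  # no candidate improves the criterion enough
        selected.append(top)
        remaining.remove(top)
        best = top_score
    return selected, best


# Hypothetical per-feature contributions, NOT real data.
contribution = {"age": 0.5, "income": 0.3, "noise": 0.001}


def toy_score(subset):
    """Made-up criterion: total contribution minus a per-feature complexity penalty."""
    return sum(contribution[f] for f in subset) - 0.01 * len(subset)


selected, best = forward_selection(contribution, toy_score)
print(selected, best)
```

Backward elimination follows the same pattern in reverse: start from all candidates and repeatedly remove the one whose removal improves the criterion most.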

6. Bayesian Regression

Bayesian regression places prior distributions on the coefficients and updates them with the data to form posterior distributions. Instead of a single point estimate, it yields a distribution for each coefficient, which makes it possible to incorporate prior knowledge and to quantify uncertainty in predictions.
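The prior-to-posterior update has a closed form in the simplest conjugate case. The sketch below assumes a single coefficient, no intercept, Gaussian noise with known variance, and a Gaussian prior on the slope. These assumptions are chosen for illustration, and `posterior_slope` is a name invented here.

```python
def posterior_slope(x, y, noise_var, prior_mean=0.0, prior_var=1.0):
    """Posterior mean and variance of b in y_i = b * x_i + noise.

    Assumes noise ~ Normal(0, noise_var) with known variance
    and prior b ~ Normal(prior_mean, prior_var).
    """
    sxx = sum(xi * xi for xi in x)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    precision = 1.0 / prior_var + sxx / noise_var  # prior + data precision
    mean = (prior_mean / prior_var + sxy / noise_var) / precision
    return mean, 1.0 / precision


x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [2.0 * xi for xi in x]  # toy data with a true slope of 2

mean, var = posterior_slope(x, y, noise_var=1.0)
print(mean, var)
```

The posterior mean sits between the prior mean and the least-squares slope, and the posterior variance shrinks as more data arrives. With a zero prior mean, the posterior mean coincides with a Ridge estimate whose penalty equals `noise_var / prior_var`.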

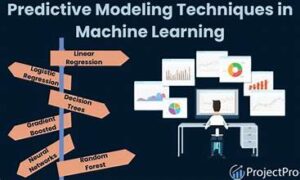

7. Machine Learning-Based Regression Techniques

7.1 Support Vector Regression (SVR)

Support Vector Regression applies the principles of Support Vector Machines (SVM) to regression problems. It seeks a function that is as flat as possible while ignoring errors smaller than a specified margin (epsilon) and penalizing only deviations beyond it. With kernel functions, SVR can model non-linear relationships, and it can handle high-dimensional data well, although training becomes expensive on very large datasets.
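The margin idea corresponds to the epsilon-insensitive loss, sketched below. Only the loss is shown, not a full SVR solver, and `epsilon_insensitive` is a name invented for this example.

```python
def epsilon_insensitive(y_true, y_pred, epsilon):
    """Zero inside the epsilon tube, linear in the excess outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


# A prediction within the margin costs nothing; one outside pays only the excess.
inside = epsilon_insensitive(3.0, 3.25, epsilon=0.5)
outside = epsilon_insensitive(3.0, 5.0, epsilon=0.5)
print(inside, outside)
```

Because points inside the tube contribute no loss, the fitted function ends up depending only on the points on or outside the tube boundary, the support vectors.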

7.2 Decision Tree Regression

Decision tree regression recursively partitions the feature space into regions and predicts a constant (typically the mean of the training targets) within each region. Trees can capture non-linear relationships and interactions, but a deep, unpruned tree is prone to overfitting. Ensemble methods mitigate this by combining many trees: Random Forest averages trees trained on bootstrap samples, and Gradient Boosting adds trees sequentially to correct the errors of the current ensemble.
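The core step of building a regression tree is choosing a split. The sketch below finds the best single split on one feature by minimizing the summed squared error of the two resulting regions. This is a depth-one "stump" on toy data, assumed here for illustration; a full tree applies the same search recursively to each region.

```python
def sse(values):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(xs, ys):
    """Return (cost, threshold, left_mean, right_mean) for the best split, or None."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        cost = sse(left) + sse(right)
        if best is None or cost < best[0]:
            best = (cost, threshold, sum(left) / len(left), sum(right) / len(right))
    return best


xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = [5.0, 5.0, 5.0, 20.0, 20.0, 20.0]  # toy step-shaped data
cost, threshold, left_mean, right_mean = best_split(xs, ys)
print(threshold, left_mean, right_mean)
```

Each region predicts the mean of its training targets, which is why the fitted function of a tree is piecewise constant.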

7.3 Neural Networks and Deep Learning

Neural networks, including feedforward networks for tabular inputs and recurrent networks for sequential data, can perform well on regression tasks when enough training data is available. They capture intricate patterns and interactions between features through multiple layers of non-linear transformations, which makes them suitable for complex predictive modeling tasks, usually at the cost of interpretability and more tuning.
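For regression, a feedforward network typically ends in a single linear output unit. The sketch below shows only the forward pass of a network with one hidden ReLU layer. The weights are arbitrary hand-picked numbers, not trained values, and training (backpropagation) is deliberately omitted.

```python
def relu(vector):
    """Element-wise max(0, a)."""
    return [max(0.0, a) for a in vector]


def dense(inputs, weights, biases):
    """Fully connected layer; weights[j] holds the input weights of unit j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def predict(inputs, w1, b1, w2, b2):
    """Hidden ReLU layer followed by a single linear output unit."""
    hidden = relu(dense(inputs, w1, b1))
    return dense(hidden, [w2], [b2])[0]


# Arbitrary illustrative weights (hypothetical, not learned from data).
w1 = [[1.0, -1.0], [0.5, 0.5]]
b1 = [0.0, 0.0]
w2 = [2.0, 3.0]
b2 = 1.0

prediction = predict([1.0, 2.0], w1, b1, w2, b2)
print(prediction)
```

The non-linearity between layers is what lets the network represent relationships a single linear layer cannot; without `relu`, the two layers would collapse into one linear model.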

8. Feature Engineering and Selection

Effective feature engineering and selection are critical for improving regression model performance. Principal Component Analysis (PCA) reduces dimensionality by replacing correlated features with a smaller set of uncorrelated components, Recursive Feature Elimination (RFE) repeatedly fits a model and drops the least important features, and feature scaling puts predictors on comparable ranges, which matters for regularized and distance-based models. Well-engineered features can significantly improve a model’s ability to uncover meaningful patterns in the data.
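Of these techniques, feature scaling is the simplest to show. The sketch below standardizes a feature to zero mean and unit variance using only the standard library. The example values are made up, and `standardize` is a name invented here.

```python
import statistics


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]  # constant feature carries no information
    return [(v - mean) / std for v in values]


incomes = [30_000.0, 45_000.0, 60_000.0, 120_000.0]  # hypothetical values
ages = [25.0, 35.0, 45.0, 55.0]                      # hypothetical values

scaled_incomes = standardize(incomes)
scaled_ages = standardize(ages)
print(scaled_incomes)
print(scaled_ages)
```

After scaling, both features are on the same footing, so a penalty on coefficient size treats them comparably instead of favoring the feature that happens to be measured in larger units. In practice the mean and standard deviation should be computed on the training data only and then reused for new data.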

Conclusion

These techniques extend ordinary regression in different directions. Regularization controls model complexity, polynomial terms and machine learning-based models capture non-linearity, quantile and robust methods handle skewed data and outliers, Bayesian methods quantify uncertainty, and feature engineering improves the inputs to all of them. By matching the technique to the data and the prediction problem, data scientists and analysts can build more robust and insightful predictive models that support better decision-making.